Every year, when Google I/O comes to town, our tech specialists start shaking with excitement. This year was no exception, so they took a digital seat at Google’s conference and pricked up their ears. Luckily, our wizards of AI, AR & Mobile were kind enough to share their take on this year’s most exciting announcements. Here are 4 things you should remember from Google I/O 2021.

N°1: Moves in Mobile

Android 12 gets a fresh makeover with Material You, Google’s new design language. Android users can now customize their system theme, and that theme carries over to apps as well. This could be a big step towards apps that adapt to each individual user.

Google is also doubling down on its UI frameworks: Flutter gets a new update, and Jetpack Compose is due for its 1.0 release in July.

And if that isn’t enough, Google and Samsung are now working closely together on multiple fronts. Flutter can now run on Tizen OS, which further expands the range of devices Flutter supports. Google’s Wear OS is also getting a major update, essentially merging with Tizen OS, and is coming to several Fitbit devices. This opens up new possibilities for building watch apps that run on a wider variety of smartwatches, while still using the standard Android tools.

N°2: Vertex AI

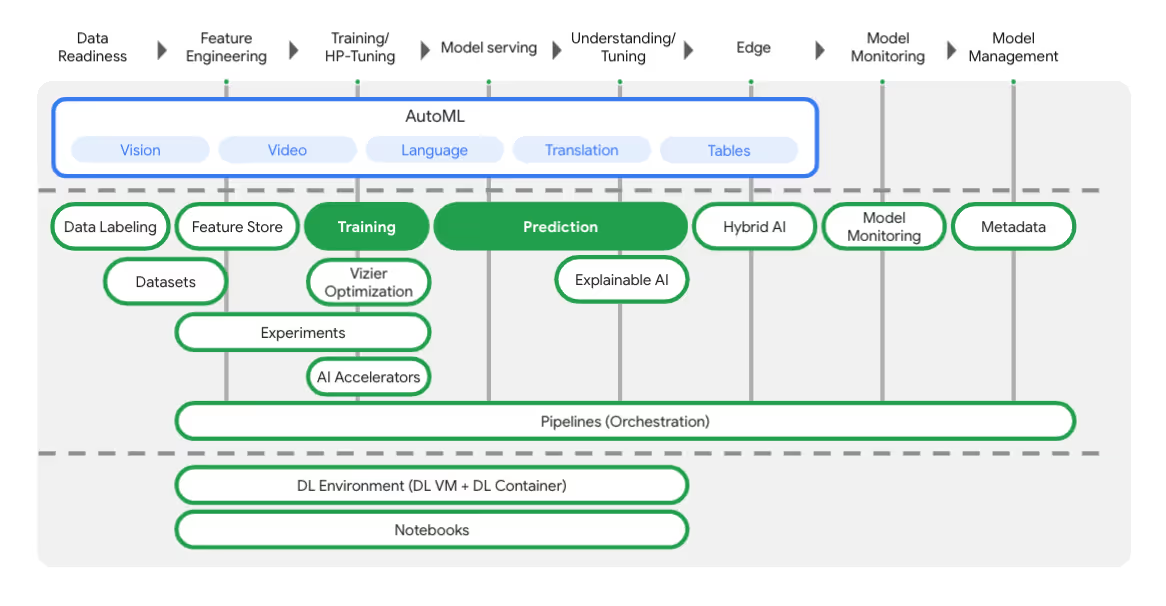

Vertex AI brings Google Cloud's machine learning offerings together into a single, unified development experience. It offers one API for training both AutoML and custom models, and ties together MLOps building blocks such as a feature store, a metadata store and orchestration pipelines. The image above shows how Google arranges all these services across the ML lifecycle.

So, what does this mean? According to Google, more standardization and tighter integration should lead to more frequent and more robust deployments of ML-based features and products. Exciting news for all AI & ML lovers out there.

N°3: AI hardware

Staying in the realm of AI, Google had some other announcements to make. Its TPU AI hardware accelerator has reached its 4th generation, and a TPU v4 pod can deliver more than one exaflop of compute, far more than an average supercomputer, if you have the budget to pay for it. This will support the trend of training ever larger neural networks and language models like LaMDA. It's only a matter of months before we’ll read about neural networks with over 1 trillion parameters.

Critical applications such as drug discovery and climate modeling are even hungrier for computational power, and for those we have to move into the realm of quantum computing. Google announced a dedicated Quantum AI campus, including a quantum data center, bringing us one step closer to solving some of the most elusive problems.

N°4: Augmented Reality

After all the rumours and speculation about Apple Glass, we fully expected Google to announce some changes in the field of AR. And indeed it did.

First off, ARCore’s machine-learning-based Depth API is getting an update that gives developers access to raw depth estimation data for more accurate environment modeling and occlusion. This enables use cases similar to what is possible on LiDAR-equipped iPhones and iPads, but without requiring any special hardware. We do expect ARCore’s ML-based depth estimates to be somewhat less accurate than what you’d get from an actual LiDAR sensor, but still impressive.

Google is also making life easier for AR developers by adding session recording and playback APIs. These could remove one of the biggest bottlenecks in AR development: every time a change is made, the app has to be deployed to a device and tested again in a specific physical setting. With the update, we can record an AR session once, including the camera feed and sensor data, and replay it as often as needed while the app is changed and updated. This has the potential to be a huge timesaver during development and QA.

Honestly, we can't wait to see what's coming at us next year.