This summer, while we were busy serving up the latest bits of AI news on our LinkedIn feed, we were also knee-deep in the trenches, helping our clients harness the power of Generative AI. By doing the actual work, we’ve grown, learned and gathered new insights. So grab a seat, and let’s dive into the Generative AI lessons we learned over the summer.

The big lesson: Think beyond the black box

You’re probably tired of the age-old cliché “think outside the box”, but this time we’re not talking metaphorically. At its core, ChatGPT is a very advanced auto-complete tool, and here’s the kicker: without the right checks and balances in place, it can generate unpredictable and incorrect responses, especially when it swims in the deep waters of specialised or niche areas. That’s where Retrieval Augmented Generation (RAG) struts in like a star.

RAG is a catch-all term for any technique that combines classical search, which fetches the most relevant information, with the wizardry of state-of-the-art generation, which turns that information into an answer humans can understand. With RAG, we leverage the Natural Language Processing (NLP) power of GPT while pulling the correct information from outside the “black box” of the model, which reduces the risk of hallucinations and mistakes.
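To make the retrieve-then-generate idea concrete, here is a minimal sketch in Python. It is not how ChatITP or Babel are built: the word-overlap scoring is a deliberately simple stand-in for a real search engine, and `DOCS`, `retrieve`, `build_prompt`, `answer` and the `generate` callable (whatever function sends a prompt to your LLM) are all hypothetical names chosen for illustration.

```python
import re
from collections import Counter
from typing import Callable

# Hypothetical knowledge base; in practice this would be your own documents.
DOCS = [
    "Expense reports must be submitted within 30 days of purchase.",
    "The office is closed on public holidays.",
    "Remote work requests need manager approval.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, doc: str) -> int:
    """Count how many query words also occur in the document."""
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    return sum(min(q[t], d[t]) for t in q)


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return up to k documents that share at least one word with the query."""
    ranked = sorted(docs, key=lambda doc: score(query, doc), reverse=True)
    return [doc for doc in ranked[:k] if score(query, doc) > 0]


def build_prompt(query: str, passages: list[str]) -> str:
    """Put the retrieved passages in front of the question."""
    sources = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the question using only the sources below. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


def answer(query: str, docs: list[str], generate: Callable[[str], str]) -> str:
    """Search first, then let the model formulate the answer."""
    return generate(build_prompt(query, retrieve(query, docs)))


if __name__ == "__main__":
    # Passing `print`-friendly identity function instead of a real LLM call.
    print(answer("When must expense reports be submitted?", DOCS, lambda p: p))
```

The point of the split is that the model only has to phrase an answer; the facts come from the retrieved passages, which you control and can show to the user.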

This recipe served up some obvious use cases, like our personalised chatbot ChatITP or a next-level search engine like Babel. Both applications first search for relevant information before formulating an answer. But there are some more exotic cases as well.

For instance, picture this: automating translations for a niche industry like the legal world, where specific terms demand specific translations. Instead of simply tossing ChatGPT into the translation process, we armed ourselves with a database of historic translations and identified specific translation pairs. When tricky translation decisions came up, we called in GPT to pick the correct translation, taking the surrounding context into account. The chosen translations were then fed back into the original legal text to create the most relevant translation possible.
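As a rough illustration of that flow, the sketch below looks up known terms in a sentence and only asks the model when a term has more than one candidate translation. Everything here is an assumption made for the example: the English-to-French `GLOSSARY` entries, the function names, and the `ask_model` callable that stands in for a GPT call. It is not the pipeline we built for the client.

```python
import re
from typing import Callable

# Hypothetical translation pairs mined from historic translations.
GLOSSARY = {
    "consideration": ["contrepartie", "considération"],
    "party": ["partie"],
}


def find_terms(sentence: str, glossary: dict[str, list[str]]) -> list[str]:
    """Return the glossary terms that appear as whole words in the sentence."""
    return [
        term
        for term in glossary
        if re.search(rf"\b{re.escape(term)}\b", sentence, flags=re.IGNORECASE)
    ]


def choose_translation(
    term: str,
    sentence: str,
    candidates: list[str],
    ask_model: Callable[[str], str],
) -> str:
    """Use the only candidate, or let the model pick one based on context."""
    if len(candidates) == 1:
        return candidates[0]
    prompt = (
        f"Sentence: {sentence}\n"
        f"Which translation of '{term}' fits this legal context? "
        f"Reply with exactly one of: {', '.join(candidates)}"
    )
    reply = ask_model(prompt).strip()
    # Guard against free-form replies: fall back to the first known pair.
    return reply if reply in candidates else candidates[0]


def resolve_terms(
    sentence: str,
    glossary: dict[str, list[str]],
    ask_model: Callable[[str], str],
) -> dict[str, str]:
    """Map every glossary term found in the sentence to one translation."""
    return {
        term: choose_translation(term, sentence, glossary[term], ask_model)
        for term in find_terms(sentence, glossary)
    }


if __name__ == "__main__":
    sentence = "Each party provides consideration under this contract."
    # A canned reply replaces the real model call in this demo.
    print(resolve_terms(sentence, GLOSSARY, lambda prompt: "contrepartie"))
```

The resolved pairs can then be passed along as fixed terminology when the full text is translated, so the model never has to guess a term it has no way of knowing.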

Lessons in Product Design: Integrate, integrate, integrate

Creating shiny new tools is cool, but never forget: integrating with existing ones is even cooler. Over the summer, we did both for our clients, from standalone web apps to seamless integrations with the tools companies are already used to.

Think about it: a lot of GPT-based applications are essentially question-answering bots, and what’s more natural than making them integrate into the internal messaging tool that a company is using? For us, that meant introducing the ChatITP Slackbot. For a large food manufacturer, we implemented a semantic search tool and integrated it into their Google Chat.

Lessons in UX: Transparency & control for the win

We’ve been beating the drum about the combination of AI and UX for a while now (okay, maybe more than a while, possibly five times or so). But here’s the truth: transparency and user control are the secret sauce for winning your users’ trust in Generative AI applications.

Here are some tips on how to improve your UX in Generative AI applications:

- Clearly mark everything that’s generated by AI with a specific colour and/or label (“AI-Generated”)

- While AI generates its answer, keep your users engaged. Think loading icons or revealing answers word by word. Or gently nudge your users to ask questions with pre-baked answers in the FAQ database.

- Know when to generate an answer from the retrieved knowledge and when to simply quote part of the original document. For example, when bureaucracy is at play, quoting the source is the safest thing to do.

- Peel back the curtain and let users see the AI’s gears in action. Show them the prompts sent to ChatGPT, or let them craft their own. In our legal translation case, we let users pick their translation pairs to fine-tune their prompt. You can also add a UI slider to control how formal the text should be.

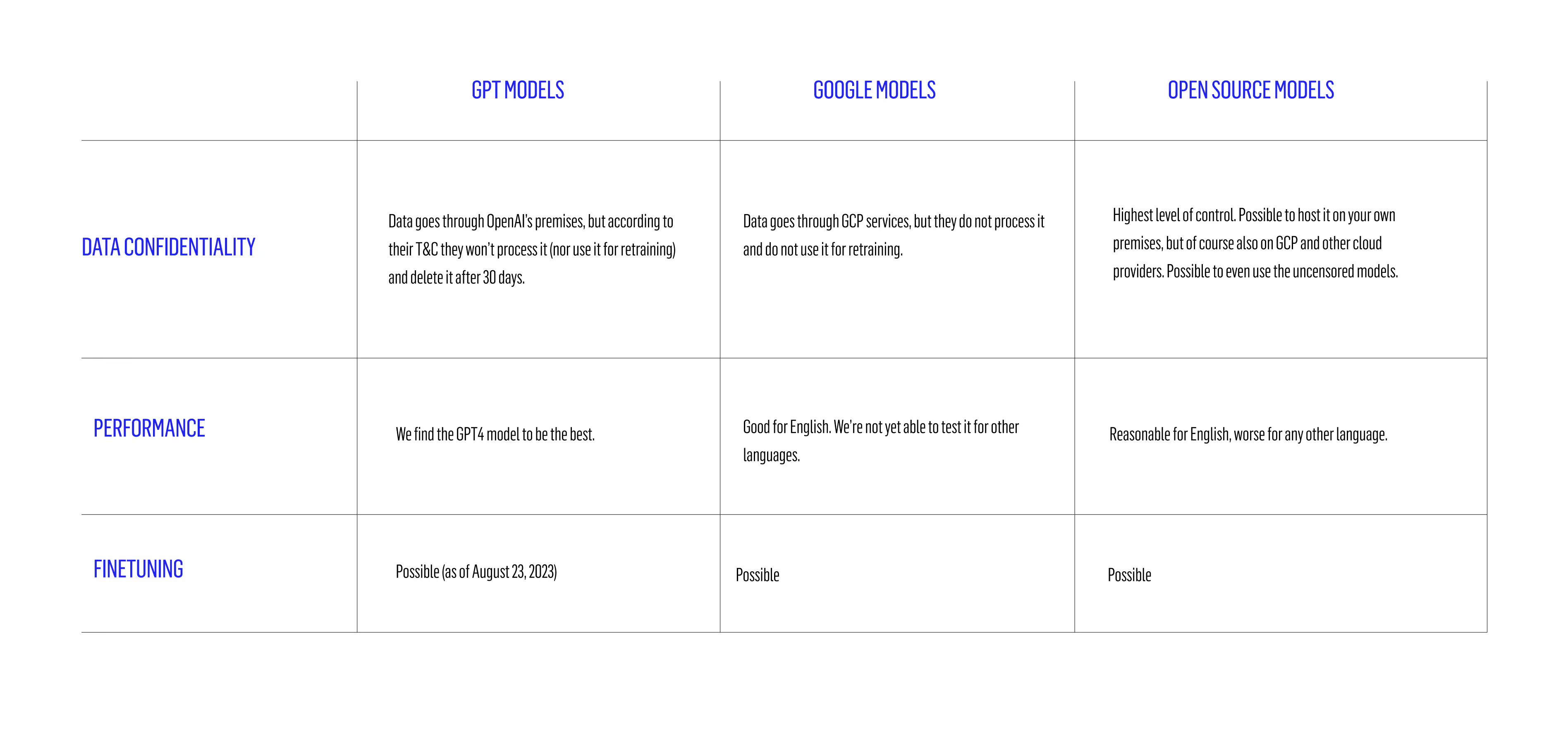
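A few of these tips translate directly into small pieces of code. The sketch below shows one possible way to map a formality slider to a prompt instruction, label output as AI-generated, and reveal an answer word by word. The 0 to 100 slider range, the thresholds, the label text and all function names are assumptions for illustration, not taken from a specific product.

```python
from typing import Iterator

AI_LABEL = "[AI-Generated]"


def formality_instruction(slider: int) -> str:
    """Translate a 0-100 slider position into a tone instruction."""
    if not 0 <= slider <= 100:
        raise ValueError("slider must be between 0 and 100")
    if slider < 34:
        return "Write in a casual, conversational tone."
    if slider < 67:
        return "Write in a neutral, professional tone."
    return "Write in a formal tone suited to official documents."


def build_prompt(task: str, slider: int) -> str:
    """Prepend the tone instruction so users can see exactly what is sent."""
    return f"{formality_instruction(slider)}\n\n{task}"


def reveal_words(answer: str) -> Iterator[str]:
    """Yield the labelled answer one word longer at each step."""
    shown: list[str] = []
    for word in answer.split():
        shown.append(word)
        yield f"{AI_LABEL} " + " ".join(shown)


if __name__ == "__main__":
    print(build_prompt("Summarise the attached contract.", slider=80))
    for frame in reveal_words("The contract runs for two years."):
        print(frame)
```

Because `build_prompt` returns the exact text that goes to the model, the same string can be shown in the interface for users who want to peek behind the curtain.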

Lessons in Data Confidentiality: Mind the trade-offs

For some of our clients, it was crucial to focus on safeguarding sensitive data. They want to know exactly where their data roams and who has the right to process it. And rightly so.

We were pushed to venture beyond ChatGPT’s APIs and consider more secure options, like other cloud providers’ LLM APIs or hosting an LLM on-premises. We played matchmaker, weighing the unique needs of each client and communicating clearly about the trade-offs.

Our default recommendation? GPT models, for their performance benefits. But if a client's data is as confidential as a government secret, we explore alternatives to GPT. We're always on the lookout for the latest updates, because these models evolve day after day.

Oh, and speaking of alternatives, AWS Bedrock has some fresh models. We haven't taken them for a spin yet, but you can bet we're itching to dive in!

Curious about what’s to come

The world of Gen AI is a wild ride, and we're strapped in, eager to explore every twist and turn. We're not just spectators; we're active participants, constantly evolving and adapting. So, there you have it – a tour through the lessons we've picked up while navigating the landscape of Generative AI. Stay tuned, because the adventure is far from over.
