In my last tech blog post we talked about building an image classifier, trained on three different body parts, using a technique called transfer learning. When our model was trained, we transformed it into a Core ML model to run it on iPhone. We found that it worked great, and that our model predicted the image classes pretty much real-time. But how fast exactly, and how much faster does the model run on the new iPhones powered by the industry’s first 7nm chip, the A12 Bionic?

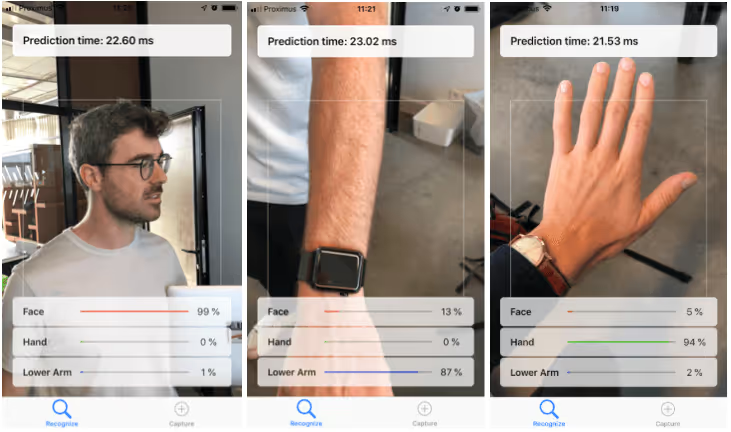

Our body part classifier is an excellent tool to benchmark ML (Machine Learning) performance between an iPhone 8 and the latest generation iPhone XS powered by the A12 Bionic chip. We’re deliberately skipping iPhone X because it’s no longer available for purchase. To have a performance metric, we’ve modified our app a little bit, so it displays the time it takes to return a prediction when a frame is fed into the trained model. This is displayed in the top of our app. We then tested the performance of our app on the two iPhones. As expected, the difference in speed was huge, but perhaps even bigger than anticipated.

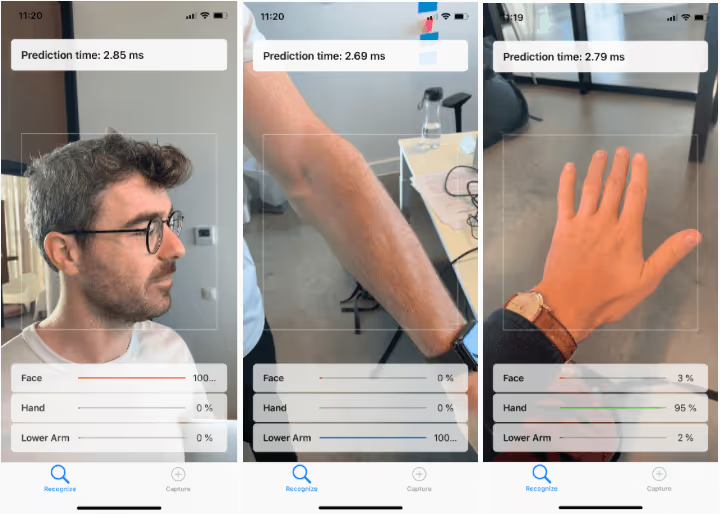

Let’s combine the average prediction time in a convenient barchart. For iPhone 8, the average prediction time is 22.38 ms, while iPhone XS does it in 2.77ms. That’s about one order of magnitude, which is a huge gain in just 1 year!

That said, with 22.38 ms/prediction, even iPhone 8 is still much faster than required for real-time predictions, since everything under 33 ms/prediction is effectively real-time, as iPhone captures video at 30 frames/s (1000 ms/30 frames = ~33 ms). Our trained model is very small though — it’s based on MobileNet v1 and only takes up 9 MB — so it’s safe to say it’s a lightweight model.

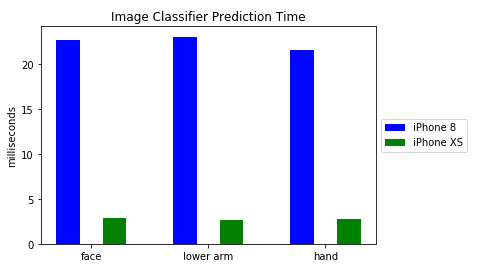

If we would alter the benchmark to a more complex model, we’re thinking of object detection here, the prediction times will obviously increase. In that case the computing power of iPhone XS comes in handy, and should enable a smooth real-time object-detection experience on a mobile device. Let’s put that to the test.

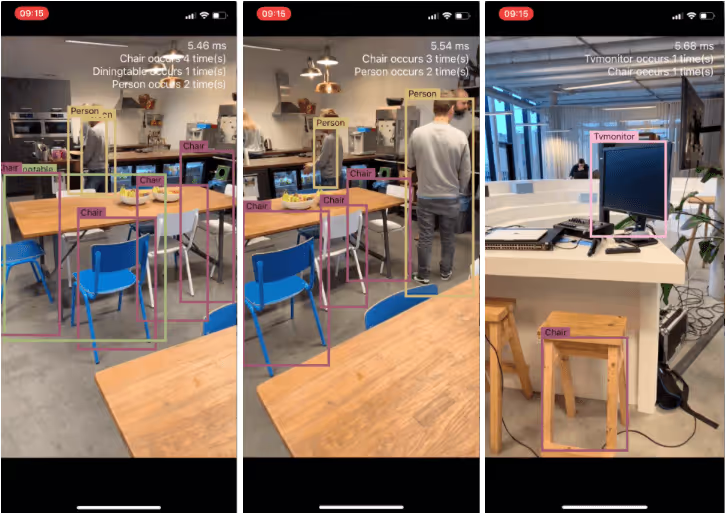

We’ve implemented a pre-trained object detection model, called TinyYOLO, into another iOS app and let our app display prediction times while performing object detection on the rear camera feed. TinyYOLO is a lightweight implementation of YOLO, trained on only 20 classification categories.

With an average prediction time of 38 ms, iPhone 8 struggles to make predictions real-time. Although, with a converted framerate of 26 frames/s, it’s still acceptable for real time predictions. iPhone XS, on the other hand, doesn’t seem to break a sweat at all, with an average prediction time of 5,6 ms.

This kind of performance increase in such a short time span is pretty impressive. Apple keeps improving hardware capabilities on iPhone, which results in performance increase. We see the impressive results on both our image classification and object detection applications. It’s safe to say that even more complex models will still scale to iPhone XS.

Of course Apple isn’t the only major company focussing on mobile implementation of Machine Learning models. Google’s Edge TPU also points in the direction of running machine learning models at your fingertips. On their website Google mentions that they are set to provide ‘possibilities to the demand of running low latency, cloud trained AI models at the edge’. To make this feasible, they’ve developed the first iteration of their Edge TPU mobile AI chip, claiming predictions at 30 frames per second on a high-resolution video in a power-efficient manner, using multiple state-of-the-art AI models.

In a next benchmark test, we would like to check out the performance of ML models on Android. We’re curious to see how it compares to iPhone’s performance. Since Android is a mobile operating system able to support many platforms, in contrast to iOS only supporting a couple of iPhone models, we’re wondering if the operating system, in conjunction with the hardware, will be as powerful a combination as is with Apple’s iPhone.

We at In The Pocket, are confident that there will be some form of AI in every app we release in the course of the next few years. Whether it’s in the form of computer vision, natural language processing, recommendation engines or simple classification problems. Big companies like Apple and Google keep leading the way to a future of AI, also on mobile, as well as the democratization of training and implementing Machine Learning models into your applications with products like Create ML from Apple and Google’s AutoML.

.avif)

.png)

.avif)