A few weeks ago, Amazon kicked off its annual re:Invent conference. Along with 500,000 others, we took a seat in the new virtual conference room and pricked up our ears. AWS re:Invent is one of the largest global cloud events, featuring keynote announcements, new launches, sessions and more, so we just couldn’t miss out.

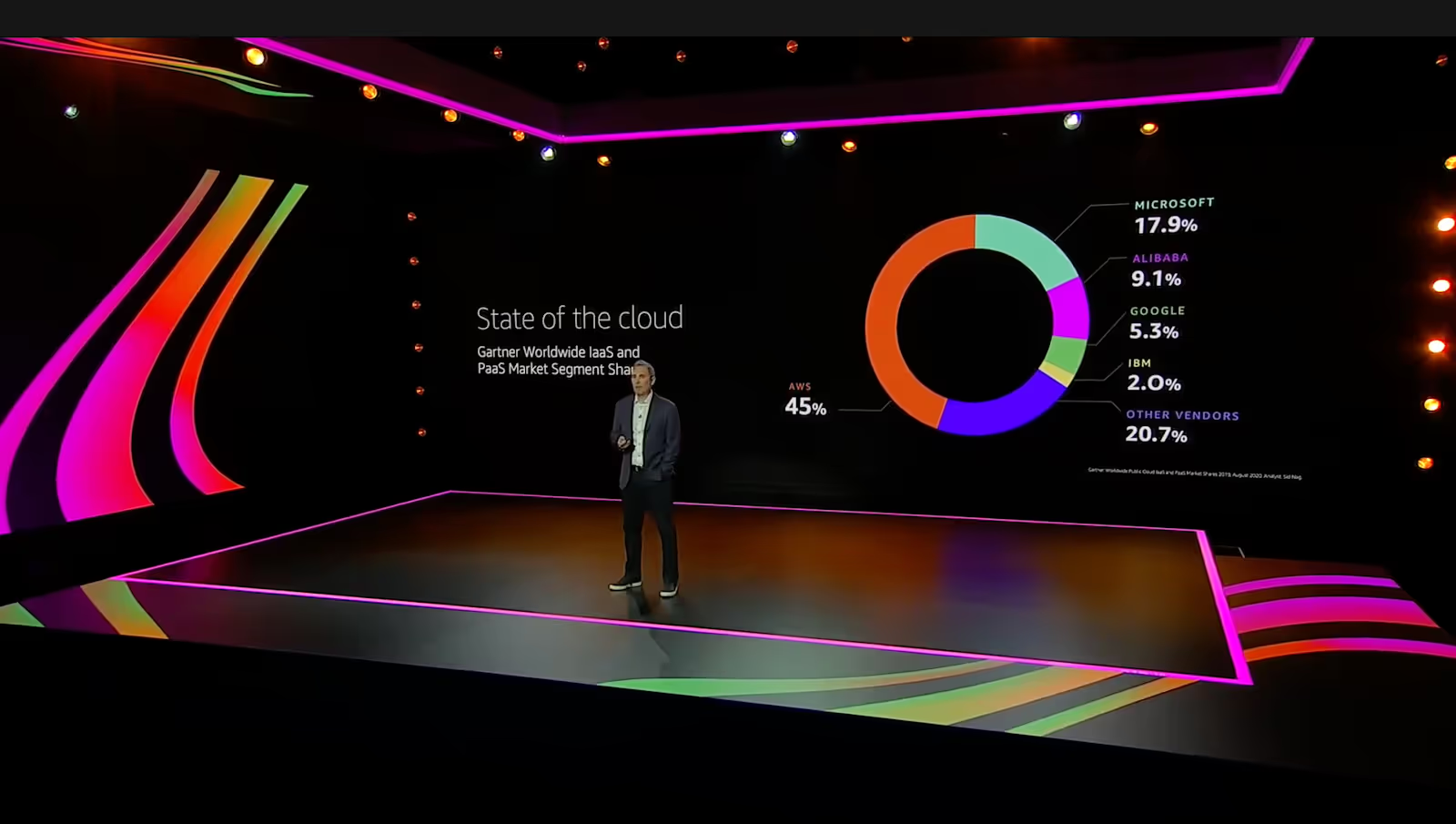

Andy Jassy (CEO of AWS) opened the conference by stressing that the global pandemic is pushing companies to the cloud more than ever. And that’s a fact. The opening keynote continued with a range of product releases, updates, highlights and announcements. For those who missed out, we collected our personal highlights into a top 5 shortlist.

1. Amazon is bringing macOS to its AWS cloud

One of the most surprising announcements in the compute category was the introduction of macOS on Amazon Elastic Compute Cloud (EC2). These instances, built on Apple Mac mini computers, are well suited to developing, building, testing, and signing applications for Apple devices such as iPhone, iPad, iPod, Mac, Apple Watch, and Apple TV.

This opens a whole new world of capabilities. You get the same benefits as with regular EC2 instances: think of elasticity, scaling, management and cost reductions. We will keep a keen eye on this at In The Pocket, as it might change the way we build and deliver Apple-based apps, one of our other specialties next to cloud-native development.

An announcement like this can't go without a video.

2. Lambda changes duration billing granularity and launches Container Support

What are we talking about?

AWS Lambda is a serverless compute service that lets you run code without provisioning or managing servers. You can run code for virtually any type of application or backend service with zero administration. Just pay as you go.

Within the AWS ecosystem, AWS Lambda is quickly growing into the powerhouse for serverless computation. By billing duration at a granularity of 1 millisecond, where it previously rounded up to the nearest 100 milliseconds, it enables users to cut running costs, especially for tasks that finish quickly. As a side effect, this encourages developers to write software that is as performant as possible, since every millisecond now counts. We’re certain this will have an impact on how we think about data flows and high-throughput applications, as well as on the metrics teams are held accountable for.
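To make the effect of the finer granularity concrete, here is a minimal sketch of the rounding arithmetic. It compares the billed duration when a run is rounded up to 100 ms against rounding up to 1 ms. The function name `billed_ms` and the 42 ms example run are our own illustrative assumptions, not AWS code or measured data. Since the duration charge is proportional to the billed duration, the relative saving holds whatever the price per GB-second is.

```python
import math


def billed_ms(duration_ms: float, granularity_ms: int) -> int:
    """Round a run time up to the next multiple of the billing granularity."""
    return math.ceil(duration_ms / granularity_ms) * granularity_ms


# Hypothetical function run of 42 ms (illustrative number, not a measurement).
DURATION_MS = 42

old = billed_ms(DURATION_MS, 100)  # rounded up to the next 100 ms
new = billed_ms(DURATION_MS, 1)    # rounded up to the next 1 ms
saving = 1 - new / old             # relative saving on the duration charge

print(f"billed before: {old} ms, billed after: {new} ms, saving: {saving:.0%}")
```

The shorter the run, the larger the relative gain: a run that already takes several seconds barely notices the change, while a run of a few tens of milliseconds is billed for a fraction of what it used to be.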

On top of the billing improvement, AWS now also enables developers to deploy custom Docker container images on Lambda. No more need for spinning up clusters, with all the operational overhead that brings. This makes it easier to include dependencies that were difficult to ship in the past because of the 250 MB package limit. Raising the limit to 10 GB for container images will broaden Lambda’s workload use cases. These changes will prove especially useful in the machine learning field.
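As a rough illustration of what the two size limits mean in practice, the sketch below picks a packaging format from the size of a function's code plus dependencies. The helper `choose_packaging` and the example sizes are hypothetical, and we assume binary units (MiB/GiB) for the 250 MB and 10 GB limits mentioned above, so check the current Lambda quotas before relying on the exact thresholds.

```python
# Limits taken from the text above; binary units are an assumption.
ZIP_LIMIT_BYTES = 250 * 1024**2    # 250 MB deployment package limit
IMAGE_LIMIT_BYTES = 10 * 1024**3   # 10 GB container image limit


def choose_packaging(size_bytes: int) -> str:
    """Return the simplest packaging format that fits the given size."""
    if size_bytes <= ZIP_LIMIT_BYTES:
        return "zip"
    if size_bytes <= IMAGE_LIMIT_BYTES:
        return "container image"
    return "too large for Lambda"


# Hypothetical workloads: a small API handler and an ML model with heavy dependencies.
print(choose_packaging(50 * 1024**2))  # small handler
print(choose_packaging(2 * 1024**3))   # model plus ML libraries
```

A multi-gigabyte bundle of model weights and ML libraries, which used to rule Lambda out, now fits as a container image.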

3. AWS Proton

What are we talking about?

AWS Proton creates and manages standardized infrastructure and deployment tooling for developers and their serverless and container-based applications.

When developing a cloud solution in AWS, there is a broad range of computing solutions to choose from. In most cases, you would use a mix of Lambda and Fargate (a serverless compute engine for containers). This requires different ways to deploy artifacts (zip archives, Docker containers). With Proton, AWS now has a managed service to perform and visualize these deployments. At In The Pocket, we currently deploy our artifacts using Terraform, but we can see the potential of Proton. It will certainly be worth exploring whether it can reduce our operational overhead.

4. Amazon EKS Distro

What are we talking about?

- Amazon EKS gives you the flexibility to start, run and scale Kubernetes applications in the AWS cloud or on-premises.

- Amazon EKS Distro is a Kubernetes distribution used by Amazon EKS to help create reliable and secure clusters.

Another one worth mentioning is EKS Distro. With Distro, AWS now has a solution for on-premises computing. EKS Distro lets you run Kubernetes workloads on-site in the same configuration as you would in AWS. This comes in handy when on-premises computing is a strong requirement.

5. Amazon SageMaker Pipelines

What are we talking about?

Amazon SageMaker helps data scientists and developers to prepare, build, train, and deploy high-quality machine learning (ML) models quickly by bringing together a broad set of capabilities purpose-built for ML.

Last but not least, Amazon SageMaker Pipelines brings DevOps capabilities to the machine learning playing field. It allows data scientists to build and automate machine learning pipelines using infrastructure-as-code, just as you would in non-machine-learning environments. In combination with SageMaker Studio, it adds an execution pipeline that machine learning teams will love.

In conclusion

One thing is clear: there are a lot of new paths to explore and enough interesting insights to follow up on. Staying hungry, we can't wait to see what next year's event has in store.
