You’re walking around in the office you’ve spent so many hours working hard, drinking coffee and playing pingpong with colleagues. All of a sudden, a threatening shadow appears. You turn around and see a huge yellow ball rolling towards you. He’s opening his big mouth, ready to eat you entirely, while you start running away. The yellow ball keeps following you, as if it’s a real human being. You can’t hide. You can’t escape. Pac-Man will come and get you.

The scene above might sound scary, but it’s a description of a game that could be developed thanks to something we’re researching: the mix between augmented reality and artificial intelligence. ‘We’, that’s the people of In The Pocket, Europe’s finest digital product studio. Our company consists of multiple teams, among which a team focussing on AR and one on AI. The first is working with Unity, while the latter knows all about TensorFlow. Recently they joined forces to create some magic.

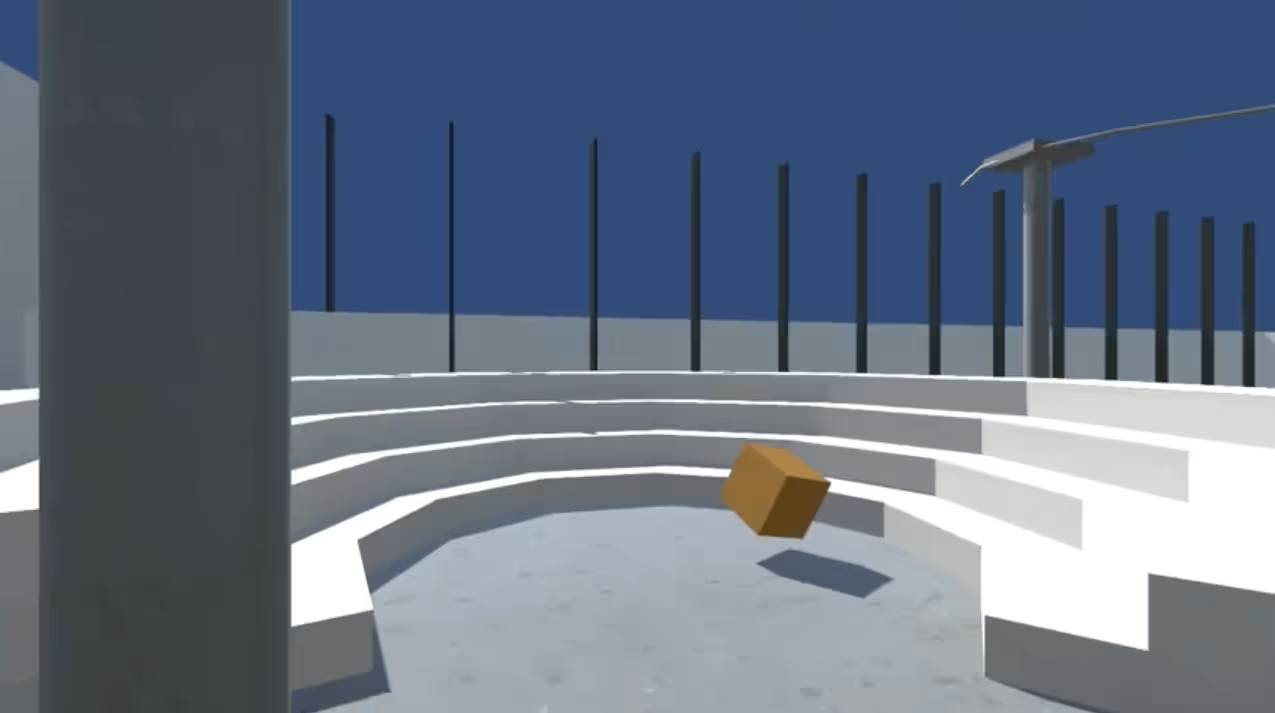

Our Augmented Reality team masters Unity - they had built a 3D model of our office for an internal demo for indoor navigation last summer. Two objects were added to this environment: a green toy tank and a yellow block. Our goal was easy: reward the tank to collide & capture the block positioned at random in our virtual office. To do this, we - and especially our intern Fabrice - have leveraged ml-agents from the folks at Unity. It's a great framework that helps you to create agents with machine learning methods in no time. State-of-the-art reinforcement learning algorithms like Proximal Policy Optimization with patience are readily available and proved to work great.

At first, the agent was a bit stupid and failed to get out of a room when it was dropped there at random. But after a couple of hours, it learned to wander out of the rooms, albeit hitting a lot of walls. We updated our reward function to punish the agent when it collides with a wall and continued training. The agent started catching the yellow blocks better and better! We noticed that it often missed the blocks when they were in sight but far away. So we increased the density of the raycast, and the agent again improved. Reinforcement Learning Kaizen!

Have a look at the final model, and how well it learned to navigate itself in our office:

Unity being a gaming platform, is really good at compiling these environments and agents onto mobile devices. Towards our goal of creating a AR Pacman running around in our office, we’ve compiled this environment onto iOS, just to see the tank in action catching blocks in our hallway. Here’s an impression of how that looks like:

The real deal

You might think that our creation is just for fun, but we’re dead serious about the potential of it. Imagine having something similar to guide you in a shop, airport or amusement park? It’s one thing to creating an AR wayfinding application, but it’s even nicer (and more scalable) if you can skip the human programming and let the agent train itself to your needs.

What’s really interesting, is that these agents can show human behaviour and patterns. The virtual robot might live in a model, but it knows the real world. Imagine if you’re developing a public building - whether it’s a hospital, train station or airport - and you organise an architectural design competition. One of the things our agent could do, is testing the accessibility and safety of the building by doing millions of simulated ‘walks’. Which roads to people take? Where do they get stuck? What about the crowd control in dangerous situations?

The biggest caveat at the moment is that the Agent lives in the 3D world, and does not sense the real world. Our desks are not yet modeled in the Unity environment, so the tank just drives through tables if you watch it with a HoloLens or another AR device. We’re thinking and exploring how we can push back 3D meshing information of the environment in the Unity model. We’re making the tank more robust by dropping random obstacles in the 3D environment so it learns to bypass them effectively, anticipating the live changes in the real world. While observing the Agent and manually tuning the reward function was fun, it threatens the scalability of virtual Agents learning themselves to navigate novel environments. We’re exploring new ways to approach multi-phased learning; getting the basics of navigating in our office right first, and mastering later how to be fast and avoid obstacles.

Obstacles don't have to stop you. If you run into a wall, don't turn around and give up. Figure out how to climb it, go through it, or work around it. Go ahead, AR Pac-Man, you’ve got all night long and ten million blocks ahead!

Special thanks to Fabrice and Dieter who helped us on this project.

.avif)

.png)

.avif)